How to Connect LlamaIndex with Private LLM API Deployments

When your enterprise doesn't use public models like OpenAI

Introduction

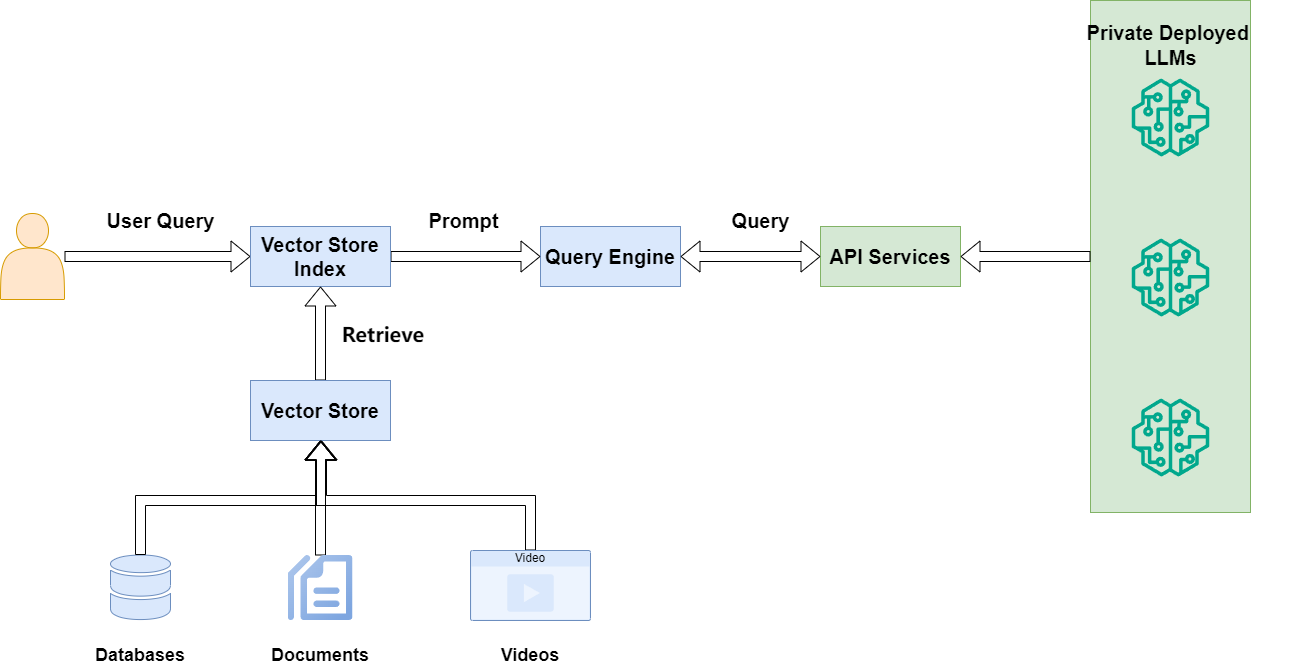

Starting with LlamaIndex is a great choice when building a RAG pipeline. However, most of the many tutorials available assume you have an OpenAI API key.

However, you might face these situations:

- Your company can only use privately deployed models due to compliance requirements.

- You're using a model fine-tuned by your data science team.

- Legal restrictions prevent your company's private data from leaving the country.

- Other reasons that require using privately deployed models.

In any of these cases, you can't use OpenAI's or other cloud providers' LLM services when building enterprise AI apps.

This leads to a frustrating first step: How do I connect my LlamaIndex code to my company's private API service?

To save you time: if you just need the solution, install this extension:

```bash
pip install -U llama-index-llms-openai-like
```

This will solve your problem.

If you want to understand why, let's continue.

Why Install an Extra SDK?

LlamaIndex uses OpenAI as the default LLM. Here's some sample code from their website, which uses DeepLake as the vector store:

```python
from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

# Placeholder values; the full tutorial defines these elsewhere
k, temp, mt = 3, 0.1, 512
user_input = "What are these documents about?"

documents = SimpleDirectoryReader("./data/").load_data()
vector_store_index = VectorStoreIndex.from_documents(documents)
vector_query_engine = vector_store_index.as_query_engine(
    similarity_top_k=k, temperature=temp, num_output=mt
)

def index_query(input_query: str):
    response = vector_query_engine.query(input_query)
    print("LLM successfully generated the desired content")
    return response

index_query(user_input)
```

I've trimmed the rest of the code for this demo; you can find the complete version on their website.

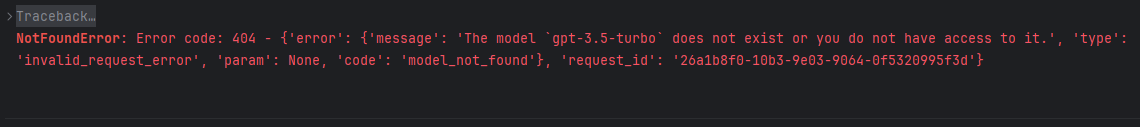

If you don't have an OpenAI subscription, you'll get this error:

```text
NotFoundError: Error code: 404 - {'error': {'message': 'The model `gpt-3.5-turbo` does not exist or you do not have access to it.', 'type': 'invalid_request_error', 'param': None, 'code': 'model_not_found'}, 'request_id': '26a1b8f0-10b3-9e03-9064-0f5320995f3d'}
```

This is the first error you'll face when deploying to production.

Let's see how to fix this when using a private Qwen-max service.

Try LlamaIndex's OpenAI Class

Our MLOps team prefers serving frameworks like vLLM or SGLang to deploy Qwen. Both expose an OpenAI-compatible API, so our Qwen service accepts requests in OpenAI's format.
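Because the service speaks the OpenAI protocol, a chat request to it has the same shape as one sent to OpenAI: a POST to `{base_url}/chat/completions` with a bearer token and a JSON body containing `model` and `messages`. The sketch below uses only the standard library to build such a request. It does not send it. The base URL, key and the `build_chat_request` helper are placeholder assumptions for illustration, not real endpoints or a library API.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values: replace with your company's endpoint and key
req = build_chat_request("http://llm.internal.example.com/v1/", "sk-placeholder", "qwen-max", "Hello")
print(req.full_url)  # http://llm.internal.example.com/v1/chat/completions
```

Any client that produces this request, including LlamaIndex's integrations, can talk to the private service.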

This sounds good. Let's check the docs to see if we can use LlamaIndex's OpenAI class.

Note: To specify which LLM class to use, set the Settings.llm property.

```python
from llama_index.core import Settings
from llama_index.llms.openai import OpenAI

Settings.llm = OpenAI(
    model="qwen-max",
    is_chat_model=True,
)
```

You must set the OPENAI_API_KEY and OPENAI_API_BASE environment variables to your company's key and endpoint.
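If you'd rather set these variables from Python than from your shell, do it before constructing the LLM object so the values are already in place when it is created. Here is a minimal sketch; the URL and key are placeholders, not real values.

```python
import os

# Placeholder values: point these at your private, OpenAI-compatible service.
# Set them before constructing Settings.llm so the LLM class can read them.
os.environ["OPENAI_API_BASE"] = "http://llm.internal.example.com/v1"
os.environ["OPENAI_API_KEY"] = "sk-placeholder"

# Fail fast if either variable is missing or empty
missing = [name for name in ("OPENAI_API_BASE", "OPENAI_API_KEY") if not os.environ.get(name)]
if missing:
    raise RuntimeError(f"Missing environment variables: {missing}")
```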

Let's try running the code:

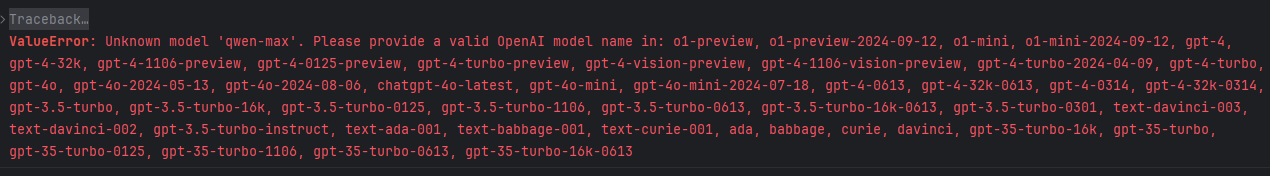

ValueError: Unknown model 'qwen-max'. Please provide a valid OpenAI model name in: o1-preview, o1-preview-2024-09-12, o1-mini, o1-mini-2024-09-12, gpt-4, gpt-4-32k, gpt-4-1106-preview, gpt-4-0125-preview, gpt-4-turbo-preview, gpt-4-vision-preview, gpt-4-1106-vision-preview, gpt-4-turbo-2024-04-09, gpt-4-turbo, gpt-4o, gpt-4o-2024-05-13, gpt-4o-2024-08-06, chatgpt-4o-latest, gpt-4o-mini, gpt-4o-mini-2024-07-18, gpt-4-0613, gpt-4-32k-0613, gpt-4-0314, gpt-4-32k-0314, gpt-3.5-turbo, gpt-3.5-turbo-16k, gpt-3.5-turbo-0125, gpt-3.5-turbo-1106, gpt-3.5-turbo-0613, gpt-3.5-turbo-16k-0613, gpt-3.5-turbo-0301, text-davinci-003, text-davinci-002, gpt-3.5-turbo-instruct, text-ada-001, text-babbage-001, text-curie-001, ada, babbage, curie, davinci, gpt-35-turbo-16k, gpt-35-turbo, gpt-35-turbo-0125, gpt-35-turbo-1106, gpt-35-turbo-0613, gpt-35-turbo-16k-0613

OPENAI_API_BASE to our company endpoint but still got GPT-related errors. Image by AuthorStrange - I pointed OPENAI_API_BASE to our company endpoint but still got GPT-related errors.

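Unlike LangChain, LlamaIndex's OpenAI class infers model metadata from the model name, such as the context window and whether the model supports chat or function calling. Any name outside its list of known OpenAI models raises the error above. As a result, this class effectively works only with OpenAI's own models.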
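To see why a lookup keyed on the model name rejects `qwen-max`, here is a toy version of that pattern. It is not LlamaIndex's source code. `KNOWN_MODELS` is a hypothetical, heavily trimmed stand-in table, and `context_size_for` is a made-up name.

```python
# Hypothetical, trimmed stand-in for a model-name -> context-window table.
KNOWN_MODELS = {
    "gpt-4": 8192,
    "gpt-4o": 128000,
}


def context_size_for(model_name: str) -> int:
    """Return the context window for a known model, or fail on unknown names."""
    if model_name not in KNOWN_MODELS:
        valid = ", ".join(KNOWN_MODELS)
        raise ValueError(
            f"Unknown model {model_name!r}. Please provide a valid OpenAI model name in: {valid}"
        )
    return KNOWN_MODELS[model_name]


print(context_size_for("gpt-4o"))  # 128000
try:
    context_size_for("qwen-max")
except ValueError as err:
    print(err)  # Unknown model 'qwen-max'. Please provide a valid OpenAI model name in: gpt-4, gpt-4o
```

Pointing the base URL elsewhere doesn't help, because the check runs on the name before any request is sent.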

Try OpenAILike Instead

As mentioned earlier, we can use the openai-like extension to connect to our API service. Let's read its API docs, which are well hidden:

The docs say:

"OpenAILike is a thin wrapper around the OpenAI model that makes it compatible with 3rd party tools that provide an openai-compatible API.

Currently, llama_index prevents using custom models with their OpenAI class because they need to be able to infer some metadata from the model name."

This explains why we can't use custom models with the OpenAI class, and why OpenAILike solves the problem.

Let's update our code and try again:

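```python
from llama_index.core import Settings
from llama_index.llms.openai_like import OpenAILike

Settings.llm = OpenAILike(
    model="qwen-max",
    # qwen-max is served as a chat model, so flag it explicitly
    is_chat_model=True,
)
```

You must set is_chat_model=True. Otherwise, LlamaIndex treats the model as a plain text-completion model and calls the completions endpoint instead of the chat endpoint. If your service only serves chat, you'll get a 404 error or other unexpected failures.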
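Here is a simplified picture of why the flag matters. OpenAI-style servers expose chat models at `/chat/completions` and text-completion models at `/completions`. A client that believes the model is not a chat model calls the latter. The `endpoint_for` function below is a hypothetical illustration of that routing, not OpenAILike's actual code.

```python
def endpoint_for(base_url: str, is_chat_model: bool) -> str:
    """Pick the OpenAI-style route a client would call (illustration only)."""
    route = "/chat/completions" if is_chat_model else "/completions"
    return base_url.rstrip("/") + route


base = "http://llm.internal.example.com/v1"  # placeholder endpoint
print(endpoint_for(base, is_chat_model=True))   # http://llm.internal.example.com/v1/chat/completions
print(endpoint_for(base, is_chat_model=False))  # http://llm.internal.example.com/v1/completions
```

If the server only implements the chat route, the second URL is the likely source of the 404.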

If you need function calling or want to build an agent, also set is_function_calling_model=True.
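In the OpenAI protocol, tool definitions travel in the request body's `tools` field. Each entry has `"type": "function"` plus a name, a description, and a JSON Schema for the parameters. Your private deployment therefore needs to support tool calling for agents to work, which is worth confirming with your MLOps team. The sketch only builds the payload. The `get_stock_price` tool is a hypothetical example chosen to fit a finance setting.

```python
import json

# Hypothetical tool, described in the OpenAI function-calling format
stock_tool = {
    "type": "function",
    "function": {
        "name": "get_stock_price",
        "description": "Look up the latest price for a stock ticker.",
        "parameters": {
            "type": "object",
            "properties": {
                "ticker": {"type": "string", "description": "e.g. AAPL"},
            },
            "required": ["ticker"],
        },
    },
}

# Chat request body carrying the tool definition alongside the messages
payload = {
    "model": "qwen-max",
    "messages": [{"role": "user", "content": "What is AAPL trading at?"}],
    "tools": [stock_tool],
}

print(json.dumps(payload, indent=2))
```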

Bingo: no errors, and the LLM is connected.

I've written another article that covers integration options for privately deployed LLMs in more depth, along with support for some newer features.

Conclusion

When trying to use LlamaIndex in enterprise RAG pipelines, I struggled to connect to private LLM services. Despite lots of Googling, no tutorial explained how to solve this.

I had to dig through LlamaIndex's API docs to find the answer. That's why I wrote this short article: to help you solve it quickly.

My team is just starting to build LLM apps in finance, and I'd like to discuss the challenges you're running into. Feel free to leave a comment; I'll reply soon.

Next, we will explore LlamaIndex Workflow.